(Update: This post is continued in Tuning #1, An Instance of Success, and Schlemiel, You’re Fired!)

In my last post, I talked about my use of AppEngine, my boosting of AppEngine as a business platform, and some of the changes as it leaves preview. But I left out the big change, the changes to pricing.

Beat Me With Your Billing Stick

The price changes were announced a few months ago, and I remember an outcry then. I just ignored it, I figured that hey, it wont be so bad, my app doesn’t do much, it’ll be cool. But now Google has released a comparison tool in the AppEngine billing history page, so you can see what you would be billed right now if the new system was already in place.

Here’s the output of my billing comparison for Syyncc, for 1 day:

| 2011-09-01 12:31:11 | Usage Report for 2011-08-29 | $0.51 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Estimated Charges Under New PricingThe charges below are estimates of what you would be paying once App Engine’s new pricing model goes live. The amounts shown below are for your information only, they are not being charged and therefore do not affect your balance. If you would like to optmize your application to reduce your costs in the future, make sure to read our Optimization Article. If you have any additional questions or concerns, please contact us at: appengine_updated_pricing@google.com. Frontend Instance Hour costs reflect a 50% price reduction active until November 20th, 2011.

* Note this total does not take into account the minimum per-application charge in the new pricing model. |

|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

So what’s the take home message here?

Old price for one day: $0.51

New price for one day: $49.92

ie: my pricing has increased by two orders of magnitude.

And, that’s a pretty cheap day. Many other days I looked at were over $60 under the new scheme.

So, instead of being in the vicinity of $200/year, I’m looking at $20,000/year.

Is it time to panic?

Not just yet. You see, there’s some interesting stuff in those numbers.

Firstly, most stuff has stayed the same. The bandwidth costs have stayed negligible. There are lots of zeroes where there were zeroes before.

In fact there are only two numbers of concern. Admitedly they are *very* worrying, but still, only two.

Frontend Instance Hours

This is one I was expecting, one that’s been getting a lot of press. Google AppEngine has removed CPU based billing and replaced it with Instance based billing. CPU based billing meant you only paid while your app was doing work. Instance based billing means you pay for work your app does, and time that the app is waiting for something to happen, and for the amount of idle capacity being held ready in reserve for your app.

It turns out that my app was using 11.39 CPU Hours per day, and 231.13 Instance hours per day. So that change is somewhat bad for me!

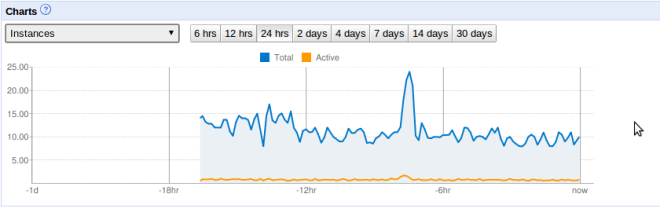

Here’s a picture:

The orange line is active instances (ie: actually in use). The blue line is total instances (ie: adding in idle instances waiting around to do something). While the total instances seems to average somewhere between 10 and 15 at any time, the active instances looks to average less than 1. ie: there’s a lot of wasted capacity.

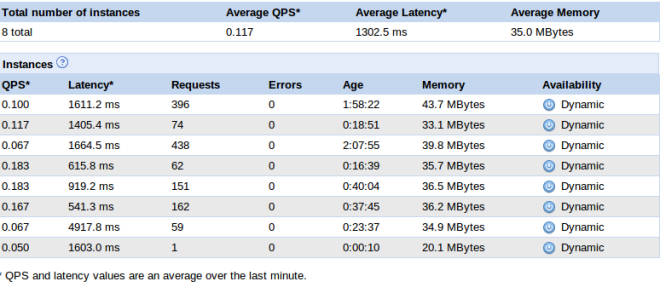

Here’s some more detail, from the AppEngine console’s Instances page:

From this page I can see Syyncc is servicing roughly one query per second. That’s like one person hitting a web page per second. A decent load, but not a killer. However, those instances are serious overkill for a load like that!

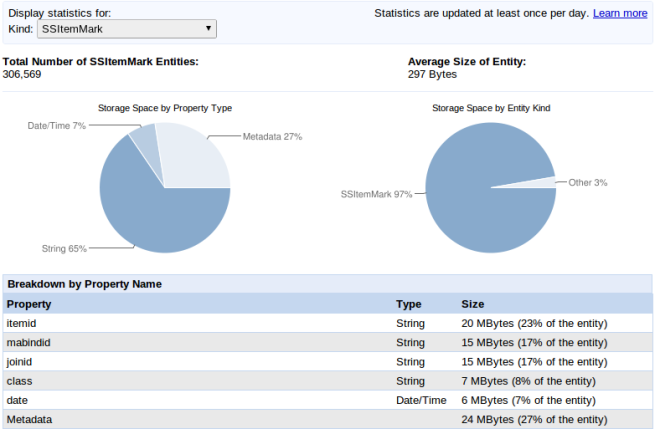

Where does all that load come from? Let’s look at the current load:

I was worried that the Rudimentary Google+ API that I put up a little while back was causing the load, but looking at this, it’s not a big deal (it’s /gpapi). The real requests are from /ssprocessmonitor (a piece of Syyncc’s monitoring of social networks which evidently runs every 2 seconds at the moment, for over a second per run), and socialsyncprocessing2, the “main loop” of syyncc which kicks off all other tasks, which runs as a cron job once every 2 mins, but runs for over 20 seconds at a time! Also, /ssweb-fbevents is worth a look, running on average over once per minute; its job is to receive incoming notifications from Facebook that a user has made a new post or comment. It’s not too bad though.

This fairly clearly tells me what’s using the most CPU time, but it’s not clear to me what is causing such a high amount of idle instances to stay running.

I guess the question really is, what is an instance? A good place to start is http://code.google.com/appengine/docs/adminconsole/instances.html . Its sort of informative, although a little brief in truth. Incidentally, this:

“This scaling helps ensure that all of your application’s current instances are being used to optimal efficiency and cost effectiveness.”

made me say “orly???”

Anyway, the gist seems to be that more instances start when tasks (ie: some kind of request to a url resource) seem to be waiting too long in existing instance queues. So the key to me bringing the instance cost down is to figure out how to reduce that wait time, I guess. Or, maybe there’s some more direct control I can take of the instance management?

Reading the google doco on this, http://code.google.com/appengine/docs/adminconsole/performancesettings.html, there are indeed two settings I can use. Firstly, I can set “Maximum number of idle instances”, which stops the app using more than this number of idle instances in anticipation of future work. It looks to me like I could set that to 1 or 2, and that would be a vast improvement on, say, 10 which the default “automatic” settings seems to be deciding on. Secondly, Minimum Pending Latency (which seems to be what causes the infrastructure to claim more instances) could be a lot higher. Remember that most of Syync’s load comes from itself, not from external users. So, being less responsive should be totally acceptable (no one can see that anyway). So, huge potential here, for cutting the cost down without a single code change.

If code changes are needed, perhaps I could write Syyncc to kick off less parallel tasks? Looking at this code in /socialsyncprocessing2 which runs every 2 mins:

monitors = SSMonitorBase.GetAllOverdueMonitors(50, 0)

if monitors:

for monitor in monitors:

logging.debug("Enqueuing task for monitor=%s" % (monitor.key()))

# See SSMonitorProcessor.py for ssprocessmonitor handler

taskqueue.add(url='/ssprocessmonitor', params={'key': monitor.key()})

What’s that doing? Well, it’s grabbing 50 monitors (ie: things that monitor one social network for one user) that are overdue to be checked, and it’s scheduling an AppEngine task to check each of them (that’s what /ssprocessmonitor does). There’s something bad about this loop in that it instrinsically doesn’t scale (once there are too many users to handle with 50 checks/2 seconds, syyncc will slow down).

But what’s worse, is that once every 2 mins, Syyncc says to AppEngine “Here, quick, do these 50 things RIGHT NOW”. AppEngine’s default rules are all around being a webserver, so it’s saying, crucially, if any tasks have to wait too long (ie: their pending latency gets too high), then kick off another instance to help service them. How long is the “automatic” minimum pending latency? I don’t know, but it can’t be much more than a second or two, going on the latency settings in my instances data.

So AppEngine kicks off an new instance, then another, but there are still heaps of tasks waiting, waiting! Oh no! Kick off more! And I’m guessing for syync it hits the 10 to 15 instances mark before it gets through all those tasks. Phew!

And then, nothing happens for another minute or so. Meanwhile, all those instances just sit there. It probably starts thinking about timing them out, shutting them down, when /ssprocessmonitor runs again and says “Here, quick, do these 50 things RIGHT NOW” again! And so on. And so the very high number of idle instances.

So changing my code to not schedule a batch of concurrent work every couple of minutes would be a good thing!

Long story short here, I’ve got options. I can probably fix this instances issue. I’ll try some stuff, and blog about the results. Stay tuned.

Meanwhile, there’s a much, much bigger issue:

Datastore Reads

What’s a datastore read? It seems to mean any time you touch, or cause AppEngine to touch, an object in the apps’ datastore. Mundane stuff. But let’s look at what the new billing scheme says:

| Datastore Reads: $0.70/Million Ops |

59.06 | 0.05 | 59.01 | $41.31 |

The first three numbers are Used, Free and Billable, in units of millions of ops, and the last is the price (Billable * $0.70/Million Ops). Per day. Ouch!

Now this never used to be part of the quota, and in fact I know of no other way to even know your stats here except for the new billing tool. So, it’s a total surprise, and it’s very difficult to find out much about it.

First up, reality check: My app is doing something in the vicinity of 60 Million operations on the datastore per day. REALLY!!??!?!?! But actually, it’s probably true.

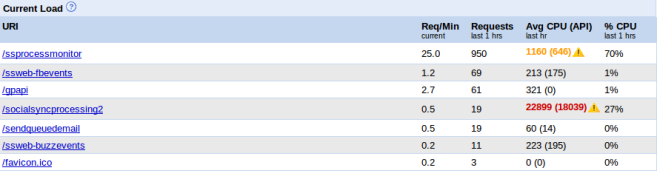

Let’s think about Syyncc’s datastore. There are a bunch of different entity types in there, some with around 3000 entities in them. And then, there is SSItemMark.

An Item Mark (which is what SSItemMark entities are) is a relationship between one post in one social network (say Facebook) and another in another network (Google+), or between a comment in one network and a comment in another. When Syyncc cross-posts or cross-comments, it saves an Item Mark to link the original post/comment and the new one Syyncc has created. If you have 3 networks set up, Syyncc creates 2 marks, if you have 4, Syyncc creates 3 marks.

Syyncc has no method of deleting old marks, I’ve never bothered with it. I’m below the paid quota for storage, and it just hasn’t mattered till now, why not just hang onto them?

So, there are currently over 300,000 of them, as is shown above.

But 300,000 is a lot less than 60,000,000. What’s the deal?

Maybe something I’m doing with my queries is touching a goodly subset of these objects every time I do a query. It could be something in /ssprocessmonitor, which is happening once every couple of seconds. If that were true, we’d have:

(K1 * 300,000) objects touched * K2 queries / 2 seconds * 60 sec/min * 60 min/hr * 24 hr/day = K1 * K2 * 12,960,000,000 objects touched per day.

Say there’s only one query responsible, so K2 == 1, then K1 is approx 0.0046

ie: I only have to be touching a small subset of those records every couple of seconds for things to get crazily out of control.

OTOH, inspecting the code, it doesn’t look like this is the case. I use fetches on the Item Marks, but they should only ever return 1 object at a time, and I never iterate them. It’s possible that my use of fetches behaves differently to what I expect, but I don’t think so. I don’t think SSItemMark is causing this issue.

Let’s look at the fetch() call, because this is the one that’s got the potential to be the culprit. I use them extensively to get data, and it turns out there’s a big gotcha!

http://code.google.com/appengine/docs/python/datastore/queryclass.html#Query_fetch

fetch(limit, offset=0) Executes the query, then returns the results. The limit and offset arguments control how many results are fetched from the datastore, and how many are returned by the fetch() method:

- The datastore fetches offset + limit results to the application. The first offset results are not skipped by the datastore itself.

- The fetch() method skips the first offset results, then returns the rest (limit results).

- The query has performance characteristics that correspond linearly with the offset amount plus the limit.

Arguments:

limit

- The number of results to return. Fewer than limit results may be returned if not enough results are available that meet the criteria.

limit is a required argument. To get every result from a query when the number of results is unknown, use the Query object as an iterable instead of using the fetch() method.

offset

- The number of results to skip.

config

- A configuration object for the API call.

The return value is a list of model instances or keys, possibly an empty list.

So, paging through records using limit and offset is incredibly prone to blowing out the number of datastore reads; the offset doesn’t mean you start somewhere in the list, it means you skip (ie: visit and ignore) every object before reaching the offset. It’s easy to see how this could lead to the 60,000,000 data store reads/day that I’m experiencing.

Another look at the code for /socialsyncprocessing2 gives this:

class SocialSyncProcessingHandler2(webapp.RequestHandler):

def get(self):

# any records with a null nextcheck are given one making them overdue

SSMonitorBase.SetAllNextChecks()

# ... and the rest ...

The way /socialsyncprocessing2 works, as we saw in the previous section, is to get up to 50 overdue monitors and process them. We know they’re overdue because there is a NextCheck field that has a date in it signifying when the monitor next needs checking. But sometimes, especially for new signups, the NextCheck is not set. So, I threw in SetAllNextChecks() to make sure there’s a value in all the monitors before they are checked. Wait, it checks all of them? Let’s look at that code:

def SetAllNextChecks(cls):

monitor_query = SSMonitorBase.all().filter("enabled", True)

offset = 0

monitors = monitor_query.fetch(10, offset)

while monitors and (len(monitors) > 0):

for monitor in monitors:

if not monitor.nextcheck:

monitor.nextcheck = datetime.today() - timedelta(days=5)

monitor.put()

offset += len(monitors)

monitors = monitor_query.fetch(10, offset)

SetAllNextChecks = classmethod(SetAllNextChecks)

There are about 1500 SSMonitorBase objects (ie: monitors). Most of these are enabled, let’s just assume they all are for now. The code above loops through them all, and any without a NextCheck set get a date in the past plugged in (which makes them overdue).

Ok, fine. Except, look at that evil loop. It uses fetch() to get 10 objects at a time, processes 10, then gets 10 more, until it gets through all 1500. And it does that by using amount and offset.

So, looking at the doco above, the loop will touch the first 10 objects. Then it’ll touch the first 10 again to get to the next 10 (so 20). Then it’ll touch the first 20 again to get to the 10 after that (that’s 30 more), and so on all the way to 1500. If the amount was 1 instead of 10, the formula N * (N-1) / 2 would give the number of accesses. With amount 10, it’s approx N * (N-1) / 20 .

1500 x (1500 -1) / 20 = 112425 datastore reads

Ok. That seems, you know, excessive.

Now consider that this happens every 2 minutes. That is, 30 times per hour, or 720 times per day.

112425 * 720 = 80,946,000 datastore reads per day.

Now that’s a few too many (what’s 20 million reads between friends?) but if we assume some of the monitors are not enabled, that’ll be dead on. It’s got to be the culprit.

That also tells me that it’s unlikely that anything else is doing excessive reading. This should account for the entire issue. Good news, no?

Now if I were to rewrite the above to just iterate on the query, and not use fetches, the number would be:

1500 * 720 = 1,008,000 datastore reads per day

which would be about 70 cents per day. Even better, it’d be an O(N) operation instead of O(N^2), so it’s not going to get wildly out of hand.

But even better than that, if I were to fix the rest of the code (particularly new user signup) so that this field is never None, I could dispense with this operation altogether. That’s the best solution, because frankly this operation is total crap. I could run it as a backstop once per day, maybe, but really I shouldn’t need it.

Wow, Solved?

Well, maybe. It’s looking good, isn’t it? If I can limit the number of idle instances to something really low, and if I can get rid of the expensive and wasteful SetAllNextChecks(), the billing should come all the way back down to where it is now. w00t!

But, I can’t be sure of that. I’ll go do it, and report back. Thanks for reading, and tune in next time to see how it goes…

Have a look at cursors too, they don’t suffer the performance problem of limit/offsets if you want to work gradually through a data set.

Yup, absolutely.

I would also consider using a pull queue for your task workload rather than normal push queues, that way you could use a cron to limit the number of tasks spun, up, and work through your offline processing in a more sequential fashion.

I’m thinking about that kind of thing. I originally built it the dumb way that I did so that it had a built in limit on how much work it would do. However, as my user base increases, I would actually like to take advantage of appengine’s platform and scale with it; I just don’t want it to blow out into crazy money.

Some kind of backend for the processing would be good though; the WordPress connection is crap because of timeouts that using a backend would solve I think.

A bigger architectural issue is mixing the engine with the website. The website is a classic low traffic website; its performance settings should reflect that (eg: low latency). The engine needs very different settings (eg: high latency). I really need to split them into two separate apps, probably using a REST interface between them.

The more I think about it, the more I think your processing approach probably suits backends and cron rather than normal frontend and task queues. I am not sure you really need to split them. I been doing the low latency low volume website for ages on appengine and have got that pretty efficient.

https://groups.google.com/d/msg/google-appengine/0tKkjM5xlc4/1bmSJx5huvwJ

Pull queues driven by cron, shouldn’t interfere with a front end. At least worth a try 😉

It would be easy to abstract the two and interpose an rpc/rest layer between them too.

[…] point7 Emlyn's Blog – Downshifting, Creating, Ranting « The Amazing Story Of AppEngine And The Two Orders Of Magnitude […]

Good post, thanks.

I have to figure out yet if the Url requests called by a task queue or a cron job are counted as user-facing requests.

Good call, I’ve been assuming they are all the same thing, but I could be wrong.

[…] Previous related posts: https://point7.wordpress.com/2011/09/03/the-amazing-story-of-appengine-and-the-two-orders-of-magnitud… and https://point7.wordpress.com/2011/09/04/appengine-tuning-1/ […]

My app has the exact same scenario as yours – I initiate ~250 URL Fetches every 15 minutes. If I allow multiple front-end instances to get created, all these fetches will occur in parallel, but I’d be paying a lot of money for all these mostly idle instances. My optimization centers around using one single backend that processes these URL fetches one at a time (totally acceptable for my app), by leasing them one at time from a pull queue. The pull queue is populated by random events in a front-end instances, and the back-end is triggered by a cron every 15 minutes to lease these URL Fetch tasks from the pull-queue. This way all my work gets done by a single backend that runs 24×7. I could easily change my cron to run once every hour instead of 15 minutes, and then my backend is running for free (just under 9 instance hours a day).

Another level of optimization is kicking off multiple URL Fetches using create_rpc() calls, so that my backend can do other things while the URL fetch completes (which, like in your case, can take several seconds to complete or timeout). With all this, I hope to stay under the free instance hour quota.

Ok, now those are the good oils, *thanks* for this comment! I’m about to post the conclusion to my initial deal-with-the-immediate-issue series of posts. After that, some more serious re-architecting will be warranted, because clearly there are better ways to do things, which are worth the effort. Your points here will contribute greatly to that.

[…] This is the concluding post in the series that begins with The Amazing Story of AppEngine and the Two Orders Of Magnitude. […]

[…] O’Regan has written a really fine article explaining in great detail his experiences tuning his application for the new pricing model. He has […]

Great article, thanks for this.

I was very encouraged to find this site. I wanted to thank you for this special read. I definitely savored every little bit of it and I have you bookmarked to check out new stuff you post.

loi

[…] upshot is here and here – it looks like a pro-tip is to avoid doing limit or offset queries, as they touch […]

I would like to thank you for the efforts you have made in writing this post. I am hoping the same best work from you in the future as well. In fact your creative writing abilities has inspired me to start my own Blog.

I wanted to take a moment to say thank you for writing and sharing this informative article. You make interesting points that I agree with in many ways. I’m really impressed with your writing technique.

Buy Wartrol

I appreciate your wp format, where did you get a hold of it?

Any great, cool, or memorable weblog URL to suggest in addition to this one?

Thank you!

Hello, after reading this amazing paragraph i am as well cheerful to share my

familiarity here with colleagues.